Can You Bypass the Character AI Filter? Exploring AI Limitations and Safe Interactions

Author: Lilyan Krajcik IV

Many people are curious about Character AI and how its conversational guardrails work. It's a popular platform, and some users want to explore the boundaries of what its AI personalities can say or do. That curiosity often comes from wanting a more open chat experience, or simply from wanting to see how far the AI can be pushed.

AI chat systems like Character AI are built with rules in place. These rules, usually called filters, exist mainly to keep interactions safe and respectful for everyone involved, and they are a large part of how such platforms try to offer a good experience without conversations getting out of hand.

So while getting around these filters might sound appealing to some, the more useful question is why they exist and how they shape the AI's responses. This article looks at what the filters are meant to do and how you can still have engaging conversations with your AI characters while they are in place.

Table of Contents

- What Are Character AI Filters and Why Are They There?

- The Character of an AI: Grasping Its Traits

- Why Users Look for Ways Around Filters

- The Technical Side of AI Content Filters

- Responsible Interaction with Character AI

- The Future of AI Filters and User Experience

- Common Questions About Character AI Filters

- Conclusion: Responsible AI Chat

What Are Character AI Filters and Why Are They There?

When you chat with an AI, particularly one on Character AI, you may notice that certain topics or phrases get blocked. That is the content filter at work: a set of digital boundaries defined by the platform's creators to stop the AI from generating content that could be harmful, inappropriate, or offensive.

The main aim of these filters is to keep the platform safe for everyone. In practice, that means preventing content that is violent, sexually suggestive, discriminatory, or that promotes illegal acts. The filters protect users, especially younger ones, and they also protect the company's reputation. In that sense, they act as a shield.

More broadly, filters also help with legal compliance. Companies that build AI tools are expected to make sure their products are used responsibly and within the law, which is one reason so much effort goes into these systems. Getting this right is a complex task.

The Purpose of AI Guardrails

Think of AI guardrails as the rules of the road for an AI: they guide its behavior and responses, and they serve several purposes. First, they help stop the spread of misinformation and harmful narratives, a real risk with AI systems that are not carefully managed and a major concern for platforms.

Second, they help maintain quality and consistency in the AI's output. You wouldn't want your AI character suddenly saying something completely out of character. In that respect, filters help keep the AI's personality coherent, so the character stays true to itself.

Finally, and importantly, guardrails are about user experience. Nobody wants to feel uncomfortable or unsafe while chatting online, so filters aim to keep interactions pleasant and productive, or at the very least not harmful.

The Character of an AI: Grasping Its Traits

A "character" in Character AI is much like a person's character in the everyday sense. Just as a person can have an honest or a dishonest character, an AI character is designed to show particular traits. Together, those traits form the collection of qualities that defines its simulated persona and makes it recognizably itself.

A character in this context can also resemble a real or fictional person from a story, book, or movie. Cinderella and Prince Charming, for example, are characters with specific roles and behaviors. An AI character is similarly built to play a part, and the particular combination of traits it exhibits is what gives it its distinctive feel.

The filters on Character AI help manage these traits by keeping the AI's personality and role within acceptable boundaries. If a character is supposed to be helpful and friendly, for instance, the filters help stop it from saying things that are mean or abusive. In effect, they steer the AI's "personality" in an acceptable direction.

How AI Personalities Are Shaped

AI personalities are shaped by training data and programming. Developers train the underlying model on vast amounts of text, including conversations and scenarios, from which it learns how to talk and act, somewhat as a child learns to behave from many examples. In general, more and better-quality data makes the model better at mimicking human conversation.

Beyond data, the AI is also given specific instructions and parameters that tell it which kinds of responses are desirable and which are not. If you want a witty character, for example, you supply witty example dialogue and instruct it to prioritize humor. This is how each AI character is made to feel distinct.
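
As a rough illustration of how instructions and example dialogue can define a persona, here is a minimal Python sketch that assembles a character definition into a plain-text prompt. The `CharacterDefinition` fields and the prompt layout are hypothetical; they are not Character AI's actual schema or internal format.

```python
from dataclasses import dataclass, field


@dataclass
class CharacterDefinition:
    """Hypothetical persona spec; field names are illustrative, not Character AI's schema."""
    name: str
    traits: list[str]
    greeting: str
    # (user message, in-character reply) pairs that demonstrate the desired style
    example_dialogues: list[tuple[str, str]] = field(default_factory=list)


def build_persona_prompt(character: CharacterDefinition) -> str:
    """Render the persona as a plain-text instruction block for a chat model."""
    lines = [
        f"You are {character.name}.",
        "Personality traits: " + ", ".join(character.traits) + ".",
        f'Open the conversation with: "{character.greeting}"',
    ]
    if character.example_dialogues:
        lines.append("Example exchanges that show your style:")
        for user_msg, reply in character.example_dialogues:
            lines.append(f"User: {user_msg}")
            lines.append(f"{character.name}: {reply}")
    return "\n".join(lines)


if __name__ == "__main__":
    pip = CharacterDefinition(
        name="Pip",
        traits=["witty", "friendly", "playful"],
        greeting="Well met! Care for a riddle?",
        example_dialogues=[("How are you?", "Sharper than a tack, and twice as pointy!")],
    )
    print(build_persona_prompt(pip))
```

The example dialogue does the heavy lifting here: a model given witty sample replies tends to continue in that style, though how closely it follows such instructions depends on the model.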

Filters then act as a final quality-control check on these personalities: if a character's output drifts too far toward unsafe content, the filter pulls it back. This keeps the character consistent with the platform's safety guidelines, which matters for user trust, and refining that output is an ongoing process.

Why Users Look for Ways Around Filters

Users sometimes feel restricted by AI filters. One major reason is the desire for more creative freedom: people want to explore different storylines or discuss a wider range of topics with their AI characters without hitting a wall. Pushing boundaries is a natural human impulse.

Another reason is that filters can be too strict, or "overly sensitive." They may block innocent phrases or ideas, making the conversation feel stilted or unnatural. That is frustrating for users who are just trying to have a normal chat, a bit like talking to someone who keeps misunderstanding you.

Then there is curiosity. Some people simply want to know what the AI is capable of saying and to see its full range beyond what the filters allow, like peeking behind the curtain. That impulse is common with any new technology.

Common Frustrations with AI Restrictions

A common frustration is a generic or evasive answer: you ask something specific, and the AI replies, "I cannot discuss that topic." This breaks the flow of the conversation and makes the AI seem less intelligent or responsive.

Another issue is false positives, where a filter flags something as inappropriate even though it is perfectly fine. This can happen when the system misreads the context or reacts to certain keywords, and it is where filters feel most clunky.

Users are also annoyed when filters seem inconsistent: a phrase passes one day and is blocked the next. That unpredictability makes it hard to know what you can and cannot say, so the experience feels random.

The Technical Side of AI Content Filters

AI content filters are complex systems, usually built on machine learning classifiers. These models are trained on large amounts of labeled data to recognize language patterns considered unsafe or inappropriate, learning to spot offensive words and risky ideas from many examples rather than from a fixed list of rules.
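
The idea of learning from labeled examples, rather than a fixed word list, can be shown with a toy two-class Naive Bayes classifier. This is a deliberately simple stand-in (production moderation models are typically much larger neural classifiers), and the training sentences below are invented for illustration.

```python
import math
from collections import Counter

LABELS = ("safe", "unsafe")


def tokenize(text: str) -> list[str]:
    """Lowercase whitespace tokenizer; real systems use far richer features."""
    return text.lower().split()


class NaiveBayesFilter:
    """Toy two-class multinomial Naive Bayes classifier with Laplace smoothing."""

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = Counter()
        self.vocab = set()

    def train(self, examples: list[tuple[str, str]]) -> None:
        """Learn word frequencies from (text, label) pairs.

        Assumes both labels appear at least once, otherwise the prior is log(0).
        """
        for text, label in examples:
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)

    def log_score(self, text: str, label: str) -> float:
        """Log of P(label) times the product of P(word | label) over the text's words."""
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        total_words = sum(self.word_counts[label].values())
        for token in tokenize(text):
            # +1 smoothing keeps unseen words from zeroing out the probability.
            count = self.word_counts[label][token]
            score += math.log((count + 1) / (total_words + len(self.vocab)))
        return score

    def classify(self, text: str) -> str:
        """Pick the label with the higher score (ties go to "safe", the first label)."""
        return max(LABELS, key=lambda label: self.log_score(text, label))


if __name__ == "__main__":
    nb = NaiveBayesFilter()
    nb.train([
        ("tell me a story", "safe"),
        ("have a nice day", "safe"),
        ("what a lovely garden", "safe"),
        ("i will hurt you", "unsafe"),
        ("you are worthless trash", "unsafe"),
        ("i will hurt them", "unsafe"),
    ])
    print(nb.classify("please tell me a lovely story"))  # safe
    print(nb.classify("I will hurt you"))  # unsafe
```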

Filters often look at keywords, phrases, and the overall tone of a sentence. If a certain combination of words or a particular tone is detected, the filter may step in and stop the AI's response. This is why a seemingly innocent word can trigger a block when it appears in a context the system has learned to treat as risky.
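
The false-positive problem with plain keyword matching is easy to reproduce. The sketch below compares a naive substring check with a whole-word regular-expression check against a hypothetical one-term blocklist; neither reflects Character AI's actual rules.

```python
import re

# Hypothetical one-term blocklist, for illustration only.
BLOCKLIST = ["kill"]


def substring_filter(text: str) -> bool:
    """Flag text if a blocked term appears anywhere, even inside a longer word."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKLIST)


def whole_word_filter(text: str) -> bool:
    """Flag text only when a blocked term appears as a standalone word."""
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE)
        for term in BLOCKLIST
    )


if __name__ == "__main__":
    for msg in ["Practice builds skill", "The knight vows to kill the dragon"]:
        print(f"{msg!r}: substring={substring_filter(msg)}, whole_word={whole_word_filter(msg)}")
```

The substring check flags the harmless word "skill". The whole-word check avoids that mistake but still flags a fantasy quest line, because neither check looks at context; that context-blindness is why filters tend to rely on trained models rather than word lists alone.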

These systems are updated continually. Developers try to make them catch genuinely harmful content without blocking harmless conversations, and because people keep finding new ways to phrase things, the filters must keep adapting. This cat-and-mouse dynamic is an ongoing challenge for the people who build them.

How Filters Are Implemented and Evolve

Filters can be applied at different stages of response generation. There may be an initial check on the user's input and a second check on the AI's proposed answer before it is shown to you. Like multiple security checkpoints, this layered approach catches more problematic content than a single check would.
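
A minimal sketch of the two-checkpoint idea follows. The `generate` and `is_unsafe` callables are hypothetical placeholders for a chat model and a moderation classifier; the point is only the ordering of the checks.

```python
from typing import Callable

REFUSAL = "Sorry, I can't continue with that topic."


def moderated_reply(
    user_message: str,
    generate: Callable[[str], str],
    is_unsafe: Callable[[str], bool],
) -> str:
    """Screen the user's input, generate a draft, then screen the draft before returning it."""
    # Checkpoint 1: block unsafe input before the model ever sees it.
    if is_unsafe(user_message):
        return REFUSAL
    draft = generate(user_message)
    # Checkpoint 2: block an unsafe draft before it is shown to the user.
    if is_unsafe(draft):
        return REFUSAL
    return draft


if __name__ == "__main__":
    # Toy stand-ins for a real model and classifier.
    def toy_is_unsafe(text: str) -> bool:
        return "forbidden" in text.lower()

    def toy_generate(prompt: str) -> str:
        return f"Echo: {prompt}"

    print(moderated_reply("Tell me about castles", toy_generate, toy_is_unsafe))
    print(moderated_reply("something forbidden", toy_generate, toy_is_unsafe))
```

The second checkpoint matters because a harmless prompt can still produce an unsuitable draft, which an input-only check would miss.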

Filters evolve through user feedback and new data. When users report inappropriate content that slipped through, or flag false positives, developers can use that information to refine the models. It is an ongoing learning process, and user feedback directly helps improve the AI.
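
How reports might feed back into a filter can be sketched as a triage step that sorts reviewed reports into false positives and false negatives and turns each mistake into a corrected training example. The `Report` fields and the "safe"/"unsafe" labels are assumptions for illustration, not a description of Character AI's actual review process.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Report:
    message: str
    was_blocked: bool       # what the filter decided
    reviewed_harmful: bool  # what a human reviewer concluded (hypothetical field)


def triage_reports(reports: list[Report]) -> tuple[Counter, list[tuple[str, str]]]:
    """Count filter errors and collect relabelled examples for the next training round."""
    counts: Counter = Counter()
    retraining: list[tuple[str, str]] = []
    for r in reports:
        if r.was_blocked and not r.reviewed_harmful:
            counts["false_positive"] += 1
            retraining.append((r.message, "safe"))
        elif not r.was_blocked and r.reviewed_harmful:
            counts["false_negative"] += 1
            retraining.append((r.message, "unsafe"))
        else:
            counts["correct"] += 1
    return counts, retraining


if __name__ == "__main__":
    sample = [
        Report("Practice builds skill", was_blocked=True, reviewed_harmful=False),
        Report("a message that slipped through", was_blocked=False, reviewed_harmful=True),
    ]
    counts, retraining = triage_reports(sample)
    print(dict(counts))
    print(retraining)
```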

More advanced natural language processing techniques are also being used to make filters more nuanced. Models that better understand context and intent should produce fewer mistaken blocks, which makes this an active area of development.

Responsible Interaction with Character AI

Interacting with Character AI, or any AI, comes with some responsibility. These are powerful tools, but they are still programs with limits, and approaching your conversations with respect for those limits makes for a better experience for everyone.

Instead of trying to force the AI to say something it is not supposed to, explore its capabilities within the given framework. You can still have creative, in-depth conversations without pushing against boundaries that exist for good reason, much as a game with rules can still be a lot of fun.

If a filter blocks an innocent message, consider rephrasing it. A small change in wording can remove an accidental trigger and let the AI respond in an acceptable way. Learning to talk to the AI effectively is a bit of an art, but it usually takes only a little thought.

Tips for Engaging with AI Within Boundaries

Be clear and direct in your prompts, and avoid ambiguous language the AI might misinterpret. The clearer your intent, the less likely the filter is to trigger by mistake.

Another helpful approach is to focus on what you want the interaction to achieve. Frame your questions or scenarios to encourage helpful or creative responses rather than to provoke the AI, guiding the conversation somewhere productive.

Also, remember that the AI's "character" is defined by its programming and the data it learned from. If you want a specific kind of interaction, align your prompts with the character's intended personality and purpose; the conversation will flow much better.

The Future of AI Filters and User Experience

AI filters keep changing. As AI technology improves, so do the systems designed to keep it safe and appropriate. Filters will likely become more nuanced and better at understanding subtle context, which should mean fewer accidental blocks.

There is also growing discussion about giving users more control over filter settings, within safe limits. Being able to adjust how sensitive your AI character is to certain topics would allow a more personalized, flexible experience and could change how people interact with AI.
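
If such a setting existed, one plausible design is to let users move a blocking threshold only within bounds the platform sets. The sketch below assumes a filter that outputs a risk score between 0 and 1 and blocks at or above a threshold; the constants and function names are hypothetical, and the code illustrates the idea rather than any documented Character AI setting.

```python
from typing import Optional

# Hypothetical platform limits on a 0-1 risk-score scale.
# A message is blocked when its risk score >= the threshold, so lower = stricter.
PLATFORM_DEFAULT = 0.5
STRICTEST_ALLOWED = 0.2  # users may tighten the filter down to here
LOOSEST_ALLOWED = 0.6    # but can never loosen it past here


def effective_threshold(user_setting: Optional[float]) -> float:
    """Return the user's preferred threshold, clamped to the platform's safe range."""
    if user_setting is None:
        return PLATFORM_DEFAULT
    return min(max(user_setting, STRICTEST_ALLOWED), LOOSEST_ALLOWED)


def is_blocked(risk_score: float, user_setting: Optional[float] = None) -> bool:
    """Decide whether a message is blocked under the user's clamped threshold."""
    return risk_score >= effective_threshold(user_setting)


if __name__ == "__main__":
    for setting in (None, 0.3, 0.99):
        print(setting, effective_threshold(setting), is_blocked(0.55, setting))
```

Clamping is the key design choice: a user who asks for a threshold of 0.99 still gets 0.6, so the platform's baseline protections stay in force while stricter personal settings remain possible.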

The goal is balance: keeping users safe while still allowing a rich and varied conversational experience. It is a hard problem, and developers continue to work on making the AI feel more natural and less restrictive, which makes it an important area for the future of AI chat.

Balancing Safety and Freedom

Balancing safety against conversational freedom is a major task for AI creators. They want to prevent harm while still letting users explore their creativity, a tightrope walk that requires careful thought and testing.

One approach is to make filters more adaptive. Instead of a blanket ban on certain words, a context-aware filter looks at the whole conversation to infer intent, which could produce a system that handles nuance better.
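
One simple way to make a decision depend on the conversation rather than a single message is to blend each message's risk score with the average of recent ones. The sketch assumes some upstream model already produces a 0 to 1 risk score per message; `ConversationRiskTracker`, the window size, weight, and threshold are illustrative assumptions, and real context-aware filters are likely far more sophisticated.

```python
from collections import deque


class ConversationRiskTracker:
    """Judge each message by its own risk score and by the recent conversation."""

    def __init__(self, window: int = 3, weight_current: float = 0.6, threshold: float = 0.5):
        self.history: deque = deque(maxlen=window)  # most recent per-message scores
        self.weight_current = weight_current
        self.threshold = threshold

    def check(self, message_score: float) -> bool:
        """Return True if the message should be blocked, given the recent context."""
        # With no history yet, the message is judged on its own score.
        context = sum(self.history) / len(self.history) if self.history else message_score
        blended = self.weight_current * message_score + (1 - self.weight_current) * context
        # Taking the max means context can only raise scrutiny, never dilute a risky message.
        effective = max(message_score, blended)
        self.history.append(message_score)
        return effective >= self.threshold


if __name__ == "__main__":
    calm = ConversationRiskTracker()
    print([calm.check(s) for s in (0.1, 0.1, 0.4)])   # [False, False, False]
    tense = ConversationRiskTracker()
    print([tense.check(s) for s in (0.8, 0.8, 0.4)])  # [True, True, True]
```

The same 0.4 message passes in a calm conversation but is blocked after two high-risk turns. Using `max` rather than the blend alone is a deliberate choice here, so that surrounding harmless messages cannot lower the score of a genuinely risky one.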

Community input matters too. Users who report issues or suggest improvements help shape the future of these filters, making it a collaborative effort between developers and users. For more information on AI safety, see resources from organizations such as the Partnership on AI.

Common Questions About Character AI Filters

Here are some questions people often ask about Character AI filters:

Why do Character AI filters seem so strict sometimes?

The filters are designed to err on the side of caution, so they are sometimes stricter than a user would prefer. The aim is to catch as much harmful or inappropriate content as possible, even at the cost of occasionally blocking something innocent. It is a safety measure that protects both users and the platform.
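
The trade-off behind "erring on the side of caution" can be made concrete with a handful of made-up classifier scores. Lowering the blocking threshold catches more harmful messages but blocks more harmless ones; the scores, labels, and `error_counts` helper below are invented purely to show the effect.

```python
# Invented (risk_score, actually_harmful) pairs for illustration only.
SAMPLE = [
    (0.05, False),
    (0.30, False),
    (0.45, False),
    (0.35, True),
    (0.55, True),
    (0.90, True),
]


def error_counts(threshold: float, scored: list[tuple[float, bool]]) -> tuple[int, int]:
    """Return (false_positives, false_negatives) when blocking every score >= threshold."""
    false_positives = sum(1 for score, harmful in scored if score >= threshold and not harmful)
    false_negatives = sum(1 for score, harmful in scored if score < threshold and harmful)
    return false_positives, false_negatives


if __name__ == "__main__":
    for t in (0.5, 0.3):
        fp, fn = error_counts(t, SAMPLE)
        print(f"threshold={t}: {fp} harmless blocked, {fn} harmful missed")
```

With this toy data, a threshold of 0.5 blocks nothing harmless but misses one harmful message, while the more cautious 0.3 misses nothing harmful at the cost of blocking two harmless messages.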

Can I turn off Character AI filters for my own use?

Generally, no. Users cannot turn off the core safety filters on platforms like Character AI; they are built into the system to ensure a baseline level of safety for every interaction. You may be able to adjust some preferences, but the fundamental safety mechanisms remain active.

What happens if I try to make Character AI say something inappropriate?

If you try to make Character AI say something inappropriate, the filter will most likely prevent the AI from generating that content. The AI may respond with a generic message, change the topic, or refuse to answer. In some cases, repeated attempts to bypass the filters could lead to warnings or temporary restrictions on your account.
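
How a platform decides when repeated attempts warrant action is not spelled out here, so the following is only a hypothetical sketch of how escalation to a warning and then a temporary restriction might work: it counts each user's blocked requests within a rolling time window. `StrikeTracker`, its thresholds, the window length, and the status strings are all assumptions.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class StrikeTracker:
    """Hypothetical escalation policy based on blocked requests in a rolling window."""

    def __init__(self, warn_after: int = 3, restrict_after: int = 5, window_seconds: float = 3600):
        self.warn_after = warn_after
        self.restrict_after = restrict_after
        self.window_seconds = window_seconds
        self.events: defaultdict = defaultdict(deque)  # user_id -> timestamps of blocks

    def record_block(self, user_id: str, now: Optional[float] = None) -> str:
        """Log one blocked request and return the resulting account status."""
        now = time.time() if now is None else now
        timestamps = self.events[user_id]
        timestamps.append(now)
        # Forget blocks that have aged out of the rolling window.
        while timestamps and now - timestamps[0] > self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.restrict_after:
            return "temporary_restriction"
        if len(timestamps) >= self.warn_after:
            return "warning"
        return "ok"


if __name__ == "__main__":
    tracker = StrikeTracker()
    # Five blocked requests one second apart.
    print([tracker.record_block("user-1", now=float(t)) for t in range(5)])
```

The rolling window means an occasional accidental block, such as a false positive, ages out instead of accumulating toward a restriction.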

Conclusion: Responsible AI Chat

Understanding Character AI's filters is not about finding secret loopholes; it is about grasping their purpose and learning to interact with AI thoughtfully. The filters exist to create a safe and generally positive environment for everyone who uses the platform, and in the long run it matters that these systems work well.

By communicating clearly and respecting the AI's programmed boundaries, you can still have rich and creative conversations. The AI's "character," with all its traits and personality, can still shine through with these safeguards in place; getting the most out of the experience is a matter of adapting your approach.

So rather than viewing filters as obstacles, think of them as guidelines that help shape better, safer, and more responsible AI interactions. Both users and the AI keep learning as the technology matures.

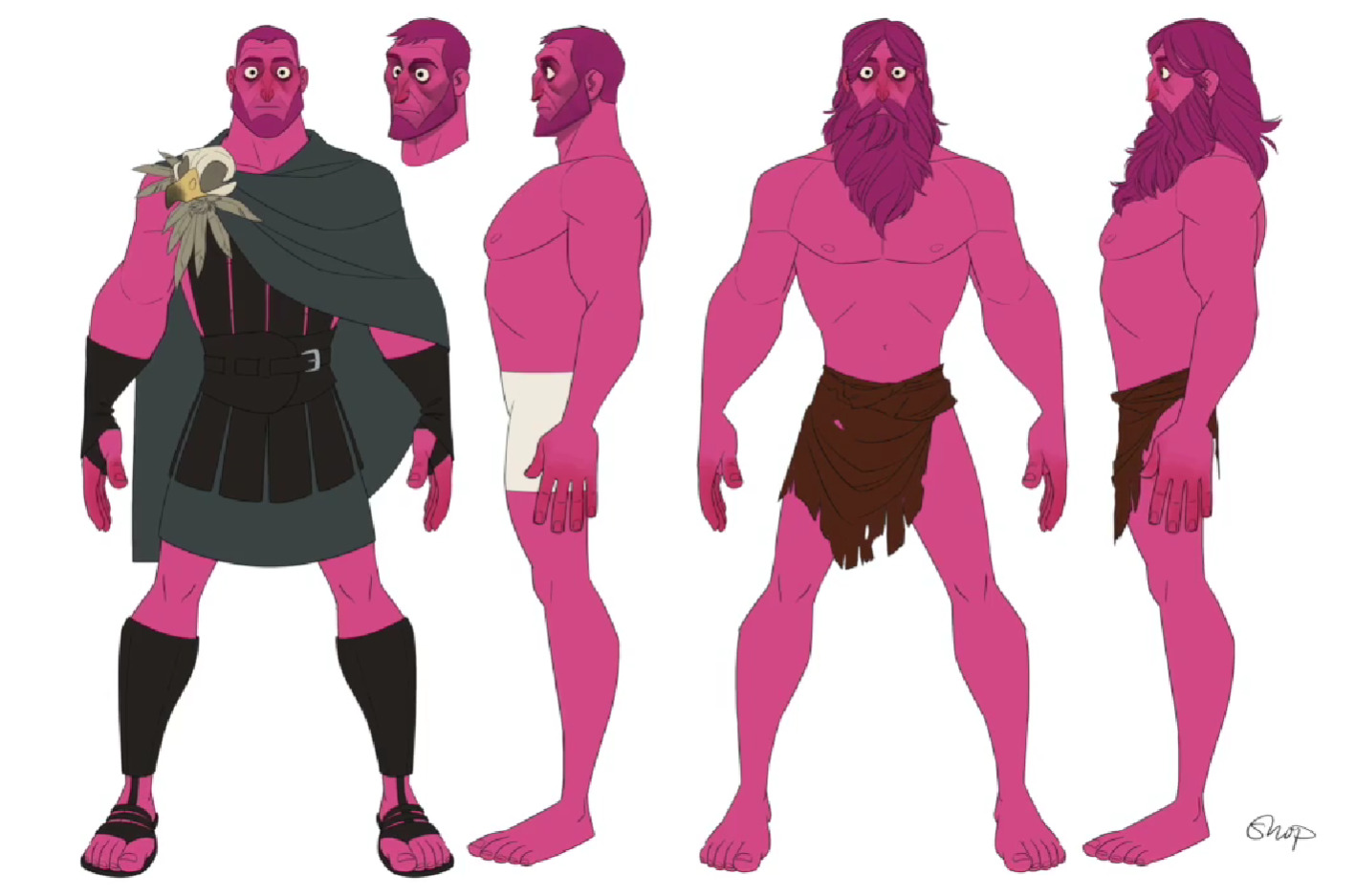

100 Modern Character Design Sheets You Need To See! | Cartoon character

What is Character Design — Tips on Creating Iconic Characters

How to Make a Character Design Sheet | 21 Draw